Two online worlds, artificial intelligence (AI) and Snapchat, are colliding. The crossover may seem unsurprising, but Snapchat is one of the first platforms to use an AI feature since Facebook adopted it back in 2013.

How AI works in Snapchat

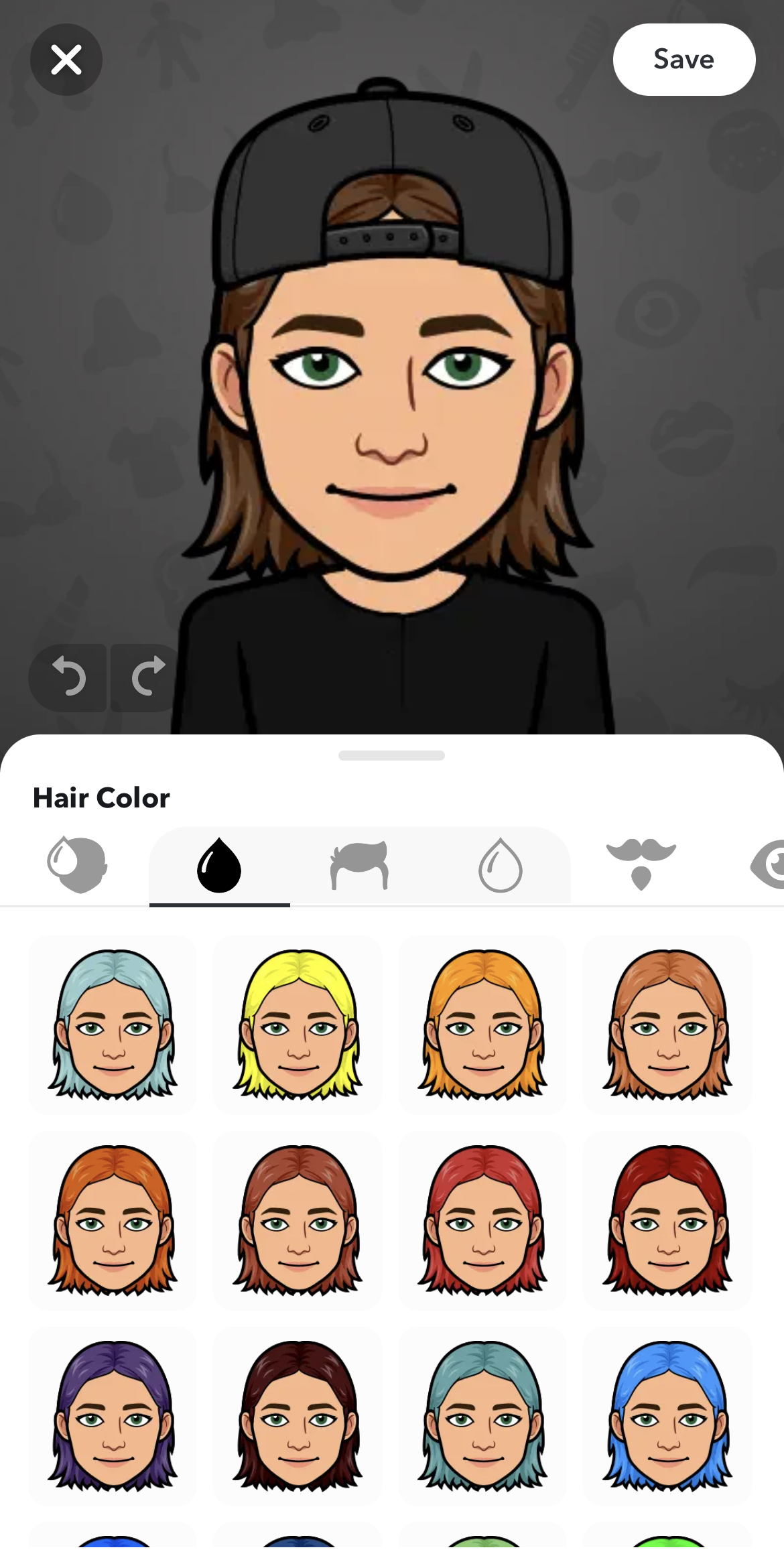

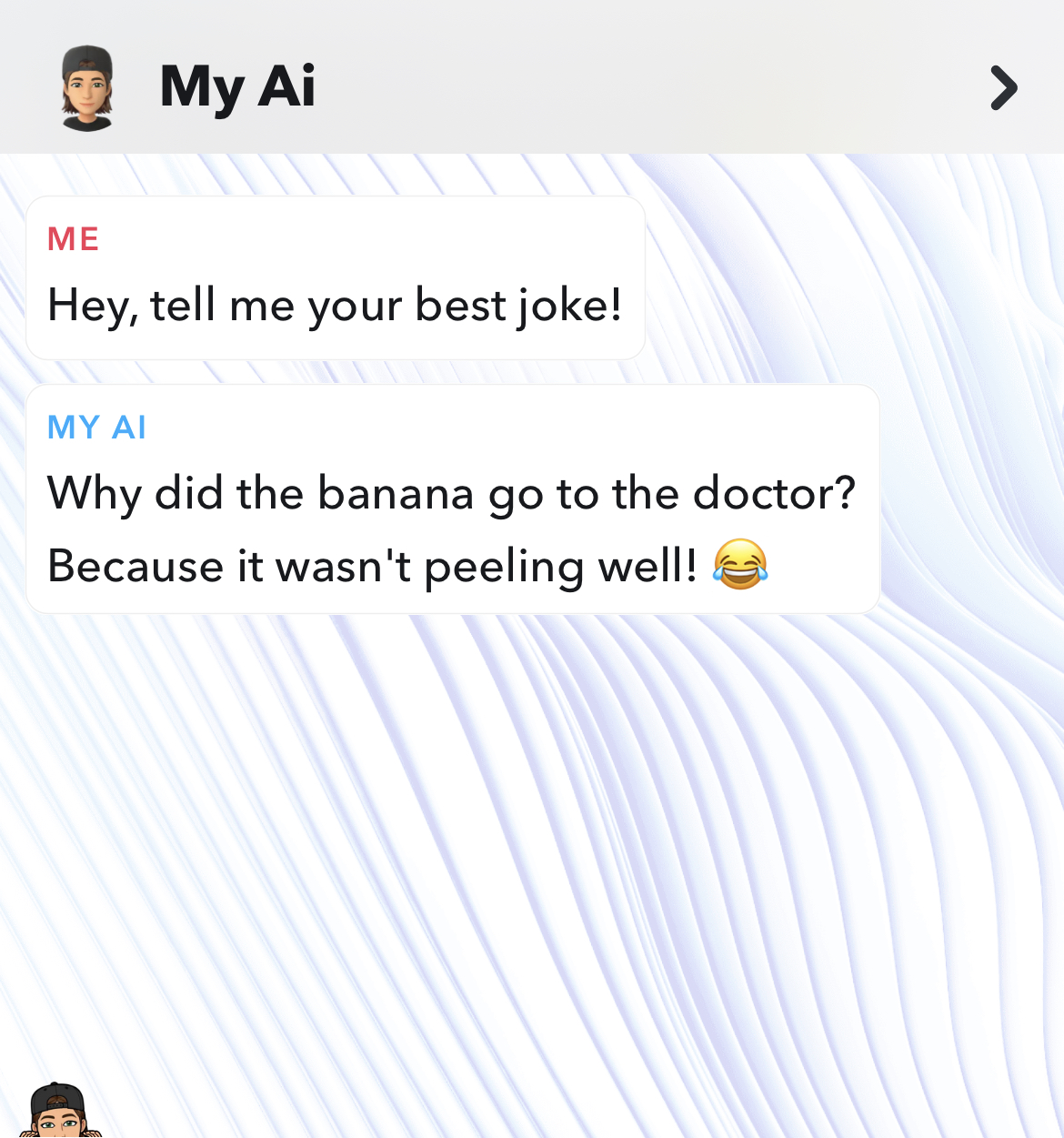

In simple terms, AI on Snapchat takes the form of a chatbot called “My AI,” accessible to every Snapchatter. Users can customize what the so-called “robot” looks like by changing its physical appearance, including skin color, hair, eyes, clothes, and much more. They can also change its name.

My AI will attempt to answer any question it’s asked. However, Snapchat Support warns users, “You should always independently check answers provided by My AI before relying on any advice, and you should not share confidential or sensitive information.”

Sofia Costanzo, junior education major, had a fun experience with the app’s AI. “When I opened Snapchat and saw the random avatar pop up, I was a bit freaked out. I kept clicking on it thinking it was someone Snapchatting me, but it was only a robot. I started playing around with it and kept asking it funny questions, and I found it kind of entertaining to talk to,” she said.

It can be hard to fully trust this feature because its answers may contain inaccuracies or misinformation. Snapchat Support itself notes, “it’s possible My AI’s responses may include biased, incorrect, harmful, or misleading content.”

Snapchat doesn’t hide from the threats this new feature can pose, and it highlights the importance of privacy by informing users that their data in the My AI chat is deleted after 24 hours.

This fresh addition to Snapchat will continue to evolve and make mistakes. It’s already sparked anguish and conversation among parents.

Dr. Nune Grigoryan, assistant professor at Cabrini, emphasized that Snapchat should take accountability when problems with users arise. “I think that the platform that releases a feature has to carry responsibility for what that feature does to the users, especially if they aren’t trained or prepared to use that technology.”

The ultimate concerns

Samantha Murphy Kelly of CNN spoke with Lyndsi Lee, a mother who does not want her 13-year-old daughter to touch the “My AI” feature. In the interview, Lee said she was unsure how to approach a conversation about the differences between humans and machines. Lee also mentioned that her daughter views humans and machines as the same, with similar abilities.

The difference between an actual human and a “generated robotic response” is confusing, and some parents aren’t ready for that conversation or don’t know how to explain it themselves.

There’s also been criticism of My AI’s occasionally inappropriate and weird responses. Kelly wrote of “some Snapchat users who are bombarding the app with bad reviews in the app store and criticisms on social media over privacy concerns, ‘creepy’ exchanges and an inability to remove the feature from their chat feed unless they pay for a premium subscription.”

It is only natural for users to raise privacy concerns, and receiving a strange response can be alarming.

“I think those concerns are legit because if you think about how most of the concerns are related to teens. Most of the articles we have seen about these concerns so far are about mental health and especially for young girls and women who are more susceptible. Somebody might have a conversation about not feeling well and then the chat can take them down the wrong path because it’s not a therapist and not a mental health support,” Grigoryan said.

As My AI and Snapchat grow, changes to the feature should be monitored on an ongoing basis. But avid Snapchat users may have the final say; they will either love or dislike it.